Local-First Conf, Ink Selection with Flux

- July 2024

We hope you’re having a wonderful summer. At Ink & Switch, we’re hard at work on several new projects which we’ll share more about soon.

In this Dispatch: we have a report from the inaugural Local-First Conference in Berlin, a deep dive from our ink team on a new selection model, and some updates on our researcher-in-residence program.

Trip report: Local-First Conf

The first local-first conference was held in Berlin last month, and several lab members attended. For those who aren’t up to speed on the local-first movement, Martin Kleppmann’s opening keynote covered the Past, Present, and Future of Local-First. Alex Good presented his recent work shipping rich-text support in Automerge. There’s now a playlist of videos from the event so you can catch up on the talks you missed. We all particularly enjoyed Maggie Appleton’s closing talk introducing the concept of the Barefoot Developer.

After the main conference there was a second “expo” day of presentations by technology builders:

- Jazz.Tools

- PowerSync

- Fireproof

- Automerge

- DXOS

- ElectricSQL

- and of course, Berlin’s own: Yjs.

It’s incredible to see a thriving community growing around these ideas. Huge thanks to Johannes Schickling, Adam Wiggins, James Arthur, and the Scéal team for putting on a great show.

One last thing: you don’t need to wait a whole year to jump in with the local-first community. Why not check out Johannes’ local-first podcast or join the Local-First Discord?

Deep dive: dynamic selection with flux

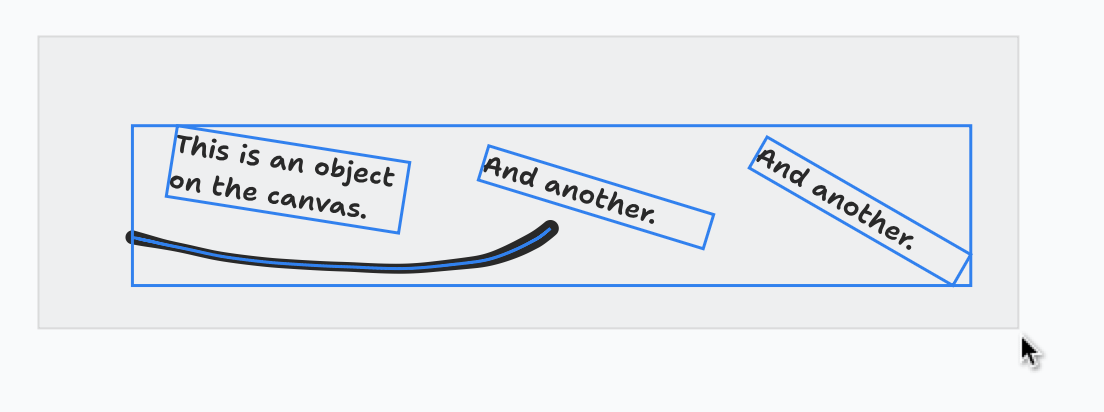

Over on the Ink team, we just wrapped up a project designing our next programmable ink system. One of the interesting developments in this project was a new approach to selecting objects on the canvas.

Imagine a typical drawing canvas, like tldraw, Cuttle, or Figma. When you make a selection, you’re always selecting something specific. You might directly click to select a single object. Or you may drag a selection marquee to cover a region of space, selecting the objects that occupy it. The former is incredibly precise. You’re saying “I want this one object”. The latter is ever so slightly more approximate. You’re saying “I want any objects in here.”

A programmable system allows a wider range of specificity. You might have some deeply nested data, and make a selection by reaching for a known place within the structure: myObject.nested.element.to.select. But you can also create a selection dynamically by matching a pattern, evaluating some criteria, or issuing a query:

dotfile = /(^|[\/\\])\../

if filename.match(dotfile) then log("unix strikes again")

These latter approaches make selection very flexible. You empower a program to do the selection for you at some point in the future, rather than selecting something yourself right this instant. You don’t know what exactly will be selected, but you do know some qualities it should possess.
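To make the pattern-matching case concrete, here is a minimal runnable sketch of the dotfile snippet above, translated into Python. The helper name `select_dotfiles` and the sample filenames are assumptions for illustration only.

```python
import re

# Same pattern as in the text: a "." that either starts the string or
# follows a path separator, followed by at least one more character.
DOTFILE = re.compile(r"(^|[/\\])\..")


def select_dotfiles(filenames):
    """Return the filenames that match the dotfile pattern (hypothetical helper)."""
    return [name for name in filenames if DOTFILE.search(name)]


# Illustrative data: we describe a quality ("is a dotfile") and let the
# program decide later which concrete items possess it.
files = [".bashrc", "src/.gitignore", "README.md", "notes/todo.txt"]
selected = select_dotfiles(files)
```

The selection is defined before we know which files exist, which is exactly the flexibility (and the loss of tangibility) described here.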

When writing the code to perform a dynamic selection, you might have a few concrete examples to generalize from. But it’s up to you to identify the pattern and express that in programming terms. You have to take a step away from the concrete, into the abstract. This is the cost of code: you gain flexibility by sacrificing tangibility. Each of the myriad approaches to dynamism feels different, and offers different tradeoffs at this step.

For our programmable ink system, we want an approach to dynamic selection that feels like a natural progression from the concrete selection of objects on a canvas. Not something like regex or a query DSL, where the way you describe a selection doesn’t much resemble the stuff being selected, especially if that stuff is graphical in nature. If our programs are going to be querying and affecting stuff on a canvas, they should be programmed right on that same canvas using that same stuff.

For a few years now, we’ve been exploring an approach to dynamic selection called spatial queries. In Inkbase, every object would keep track of the objects around it, so you could for instance ask any object for the things to its right. But “to the right” is a bit vague, and clarifying “50 pixels to the right” feels like coding, not like drawing. It’s also a bit too rigid — “50 pixels to the right” is less sketchy than “the rest of the sentence” or “this entire doodle”, and in our programmable ink system we prize sketchiness.
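As a rough illustration of why “50 pixels to the right” feels like coding, here is what such a spatial query might look like in Python. This is a sketch under assumptions, not Inkbase’s actual API: the `Box` type, the `to_the_right` function, and the `max_gap` parameter are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box of a canvas object (hypothetical type)."""
    name: str
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float

    @property
    def right(self):
        return self.x + self.width

    @property
    def bottom(self):
        return self.y + self.height


def to_the_right(anchor, objects, max_gap=50.0):
    """Objects whose left edge lies within max_gap of the anchor's right
    edge and which overlap the anchor vertically, nearest first."""
    hits = []
    for obj in objects:
        if obj is anchor:
            continue
        gap = obj.x - anchor.right
        overlaps_vertically = obj.y < anchor.bottom and obj.bottom > anchor.y
        if 0 <= gap <= max_gap and overlaps_vertically:
            hits.append(obj)
    return sorted(hits, key=lambda o: o.x)


checkbox = Box("checkbox", 0, 0, 20, 20)
label = Box("label", 30, 2, 80, 16)
far_note = Box("far note", 200, 0, 60, 20)
below = Box("below", 30, 40, 80, 16)
names = [o.name for o in to_the_right(checkbox, [checkbox, label, far_note, below])]
```

The hard threshold is the rigid part: a label 51 pixels away is silently excluded, which is at odds with sketchy, hand-drawn input.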

Over the past few months, while designing our new programmable ink system, we’ve been playing with a new idea for dynamic selection. We call it flux. It’s spatial queries as an object you can see and touch.

You draw flux on the canvas just like ink, and then it spreads out from where it was drawn, flowing and pooling like a liquid. It can spread through space, or through ink — your choice. When flux floods the inside of a region encircled by ink, it resists flowing through any small gaps. When it spreads along ink strokes, it can jump across small gaps.
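One way to picture flux spreading along ink and jumping small gaps is as a breadth-first search over strokes, where two strokes count as connected if they come close enough to each other. The sketch below is only an illustration of that idea, not the lab’s implementation; `stroke_gap`, `spread_along_ink`, and the `max_gap` threshold are assumed names and values.

```python
from collections import deque
from math import hypot


def stroke_gap(a, b):
    """Smallest distance between sample points of two strokes.
    Only the sampled points are compared, not the segments between them."""
    return min(hypot(ax - bx, ay - by) for ax, ay in a for bx, by in b)


def spread_along_ink(strokes, start, max_gap=5.0):
    """Return the indices of strokes reached from `start`, hopping across
    gaps no wider than max_gap (breadth-first search)."""
    reached = {start}
    frontier = deque([start])
    while frontier:
        current = frontier.popleft()
        for i, stroke in enumerate(strokes):
            if i not in reached and stroke_gap(strokes[current], stroke) <= max_gap:
                reached.add(i)
                frontier.append(i)
    return sorted(reached)


# Four strokes on a line, each a list of (x, y) sample points.
strokes = [
    [(0, 0), (10, 0)],    # 0
    [(13, 0), (20, 0)],   # 1: gap of 3 from stroke 0
    [(24, 0), (30, 0)],   # 2: gap of 4 from stroke 1
    [(60, 0), (70, 0)],   # 3: gap of 30 from stroke 2
]
selected = spread_along_ink(strokes, start=0)
```

Stroke 2 is too far from stroke 0 to be reached directly, but the flux gets there by way of stroke 1. Stroke 3 sits across a gap that is too wide to jump, so it stays unselected.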

Ink that is engulfed by flux is treated as a selection, just as though you’d selected the ink by hand. And you can use any of these selections (from flux or by hand) as the input to a programmed behaviour.

Flux will continue to flow and retract in reaction to changes in the ink around it. If you pull some flux-covered ink away, the flux withdraws. If you write or draw close to some flux, the flux will spread to encompass your newly made strokes. It’s a dynamic query that you can draw, see, and manipulate right on the canvas. It exists somewhere in between a marquee or lasso, which encompasses a region of space, and a reactive query, which continually feeds values into a computation.

We tried to make the experience of working with flux feel very much like other types of drawing and selection on the canvas. You can even tug some flux to guide how it will spread, which is helpful for selecting (say) all the text to the right of a checkbox.

Behind the scenes, flux works a bit like a hybrid of a particle system and a cellular automaton. There are rules governing how the bits of flux relate to their neighbouring bits of flux, and how they react to other elements on the canvas.
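To give a flavour of the cellular-automaton half of that description, here is a toy grid model in Python. It is a sketch under heavy assumptions and not how flux is actually implemented: the cell symbols, the four-neighbour rule, and the “narrow gap” test (ink on two opposite sides of a cell) are all invented for illustration, and retraction is not modelled.

```python
INK, EMPTY, FLUX = "#", ".", "~"


def cell(grid, r, c):
    """Value at (r, c), treating everything outside the grid as empty."""
    if 0 <= r < len(grid) and 0 <= c < len(grid[0]):
        return grid[r][c]
    return EMPTY


def is_narrow_gap(grid, r, c):
    """True when ink sits on two opposite sides of the cell (a one-cell gap)."""
    horizontal = cell(grid, r, c - 1) == INK and cell(grid, r, c + 1) == INK
    vertical = cell(grid, r - 1, c) == INK and cell(grid, r + 1, c) == INK
    return horizontal or vertical


def step(grid):
    """One synchronous update: an empty cell turns to flux if a neighbour
    is flux, unless the cell is a narrow gap between ink."""
    new = [list(row) for row in grid]
    for r, row in enumerate(grid):
        for c, value in enumerate(row):
            if value != EMPTY or is_narrow_gap(grid, r, c):
                continue
            neighbours = [cell(grid, r + dr, c + dc)
                          for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))]
            if FLUX in neighbours:
                new[r][c] = FLUX
    return ["".join(row) for row in new]


def settle(grid, max_steps=1000):
    """Apply the rule repeatedly until nothing changes."""
    for _ in range(max_steps):
        nxt = step(grid)
        if nxt == grid:
            break
        grid = nxt
    return grid


# A box of ink with a one-cell gap in its right wall and a drop of flux inside.
canvas = [
    ".........",
    ".#####...",
    ".#...#...",
    ".#.~.....",
    ".#...#...",
    ".#####...",
    ".........",
]
settled = settle(canvas)
```

In this toy model the flux pools inside the box and stops at the gap rather than leaking out. Each cell only ever looks at its immediate neighbours, so the behaviour comes entirely from local rules.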

We’re still exploring how flux interacts with other parts of our programmable ink system. How exactly should flux confer effects upon the things it selects? Do we want flux to eventually cool into a more static state? Or do we want flux to be even more dynamic, to spread and retract on command by a script? We don’t yet know what exactly flux is, how it works, or what it means, so you can look forward to hearing more about it in the coming months.

Researchers-in-residence

Alexander Obenauer has wrapped up his term as a researcher-in-residence with the lab. We’re excited to keep following his work on personal health informatics and more.

We’re thrilled to welcome a new researcher-in-residence: Maggie Appleton! She’ll be exploring ideas at the intersection of tools for thought and language models—more details to come in future dispatches.

What’s a few more open tabs?

That’s all for now. Until next time!